There are lots of tricky problems when it comes to generating high-quality automated reports, and repetition is one of the toughest. Repetition is a difficult problem for automated writing systems.

Do those sentences read well together? I’m guessing you probably think ‘no’. They seem pretty clearly repetitive, but that’s only obvious from a human perspective. From a computer’s perspective, however, it’s not so clear.

Why that is and how we can try to get around that will be the subject of this post. This one will (hopefully) be part of a series of posts where I go into a bit more detail about the technical challenges that underlie high-quality data-focused generative AI. A lot of these things are problems, like repetition, that are hard to even notice if you haven’t spent time in the AI trenches, as we don’t think twice about them as humans.

First, why is repetition even an issue in the first place? If you build automated reports using templates, it isn’t. That’s because you know exactly what stories are going to appear at each point in a narrative, so you can use the awesome repetition fighting powers of your human brain to make sure that the template avoids any repetition.

Using a template is severely restricting, however, because the template can’t flexibly adapt to the underlying data, and therefore can’t possibly report on the most important information that the reader needs to see. The best way to set up an automated report is to allow the system to individually identify each event within the data set and then build a narrative out of only the best parts.

However, once you’ve freed the software from templates and given it flexibility in how it arranges information, you’ve also summoned the Kraken that is repetition. To understand how tricky that problem can be, let’s paraphrase the pair of sentences that started this post:

There are lots of high-scoring wide receivers in the NFC, and DeAndre Hopkins is one of the best. DeAndre Hopkins is a good fantasy wide receiver.

We’d say this is repetitive because of the double mention of DeAndre Hopkins being a good receiver, but let’s look at it from a computer’s perspective. The first sentence is actually made of two parts: (1) identifying that there are many high-scoring WRs in the NFC, and (2) saying DeAndre Hopkins is good. The second sentence is just about DeAndre Hopkins being good. For software, these two sentences are not the same, since the first has two components and the second sentence has just one. Ah, you say, but what if we give software the ability to recognize each of the two subcomponents of the first sentence so that it can understand that it conflicts with the second sentence? Well, that’s a good idea in general, but it won’t save you in this case, because the two sentences in this example don’t even share the same sub-component.

The first sentence says that DeAndre Hopkins is ‘one of the best’ WRs while the second sentence merely identifies DeAndre Hopkins as being good. The issue here is that in order to get software to write these sentences you would need to build the capability to have it both identify a ‘good’ WR and also rank order them and identify some subset that would be considered a grouping of ‘the best’. These are two different operations, so the system would not inherently see them as being the same thing.
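To make the "two different operations" point concrete, here is a minimal sketch. Everything in it is hypothetical: the point totals are made up, and `good_player_stories` and `top_ranked_stories` are illustrative names rather than anyone's production detectors. One detector is a threshold test, the other is a rank ordering, and the stories they emit share nothing but a subject.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Story:
    trigger: str   # which detector fired
    subject: str   # who the story is about

# Hypothetical season point totals; not real statistics.
POINTS = {"Hopkins": 210, "Player B": 190, "Player C": 120, "Player D": 95}

def good_player_stories(points, threshold=150):
    """Threshold test: fires for anyone at or above a fixed point total."""
    return [Story("good_player", name) for name, pts in points.items() if pts >= threshold]

def top_ranked_stories(points, top_n=1):
    """Rank test: sorts everyone and fires for the top of the list."""
    ranked = sorted(points, key=points.get, reverse=True)
    return [Story("one_of_the_best", name) for name in ranked[:top_n]]

stories = good_player_stories(POINTS) + top_ranked_stories(POINTS)
hopkins = [s for s in stories if s.subject == "Hopkins"]
# Two stories about the same player, yet the two Story objects do not compare equal.
print(hopkins)
```

A naive equality check sees two unrelated stories here, which is exactly why something more conceptual is needed.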

This is an example of a conceptual repetition problem, where there are two events or stories identified by an NLG system that are different (including involving different calculations and a different ‘trigger’) but are conceptually similar enough that it doesn’t make sense to include them both in the same report.

Building a conceptual hierarchy is the first step towards solving this problem. If the two stories above share some parent concept then the system can begin to recognize them as being duplicative. However, it’s not quite that simple, as many stories could share a parent while still being able to coexist in a narrative. For example, ‘team on a winning streak’ and ‘team on a losing streak’ could both share a ‘streak story’ parent, and yet could make sense in the same article (“The win is the third in a row for Team A, while the loss is the fourth in a row for Team B”).

That brings us to another problem with repetition: dealing with different objects referenced by the stories. Going back to the DeAndre Hopkins story, it’s duplicative to mention that he is both ‘one of the best WRs’ and also that he ‘is a good WR’, but it wouldn’t be duplicative to mention that some other WR is good. That said, if you were talking about 5 different players, it might start to get repetitive to mention over and over again that each of them was ‘one of the best’ at their position.
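A rough sketch of the parent-concept idea, taking the subject of each story into account, might look like the following. The concept tree and the rule in `is_candidate_duplicate` are assumptions made for illustration; a real hierarchy would be far larger and the rule far less blunt.

```python
# Hypothetical concept tree, stored as child -> parent.
PARENT = {
    "good_player": "player_quality",
    "one_of_the_best": "player_quality",
    "winning_streak": "streak",
    "losing_streak": "streak",
    "player_quality": "story",
    "streak": "story",
}

def ancestors(concept):
    """Return the concept followed by each of its parents up to the root."""
    chain = [concept]
    while chain[-1] in PARENT:
        chain.append(PARENT[chain[-1]])
    return chain

def shared_parent(a, b):
    """Return the nearest concept that both stories descend from, if any."""
    seen = set(ancestors(a))
    for concept in ancestors(b):
        if concept in seen:
            return concept
    return None

def is_candidate_duplicate(story_a, story_b):
    """Each story is (concept, subject). Flag only when the stories share a
    parent below the root AND are about the same subject."""
    parent = shared_parent(story_a[0], story_b[0])
    return parent not in (None, "story") and story_a[1] == story_b[1]
```

With this rule, the two Hopkins stories are flagged, while a winning streak for Team A and a losing streak for Team B are allowed to coexist even though both sit under the same ‘streak’ parent.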

Therefore, the conceptual hierarchy needs to be able to recognize, for any given pair of sentences, the conceptual ‘distance’ that each item is from the other. It can take into account the nature of commonalities between the events in both stories and also look at factors such as whether they are being applied to different objects (which themselves would have a ‘conceptual distance’ between them, e.g. WR is closer to RB than WR is to Team) and also the inherent repetition factor of a given story. Typically, events that are more unique (such as a player scoring their highest total in a stat for the season) are more prone to repetition concerns than something like a team winning or losing a given game, which is bound to happen. In the example above talking about five different WRs, it would sound repetitive to talk about each of them achieving a recent season-high in a stat, even if they were different stats. If you were giving a synopsis about the recent performance of five teams however, it wouldn’t feel as duplicative to mention the won/loss result of each team’s recent game.
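One simple way to put a number on ‘conceptual distance’ between objects is to count the steps between them in a hierarchy. The sketch below assumes a tiny, made-up object tree (`OBJECT_PARENT`) and uses plain edge counting; it is one possible metric, not a description of how any particular system measures distance.

```python
# Hypothetical object hierarchy, stored as child -> parent.
OBJECT_PARENT = {"WR": "player", "RB": "player", "player": "entity", "team": "entity"}

def path_to_root(node, parent):
    """Return the node followed by each of its ancestors."""
    path = [node]
    while path[-1] in parent:
        path.append(parent[path[-1]])
    return path

def tree_distance(a, b, parent):
    """Number of edges between a and b via their nearest common ancestor."""
    path_a, path_b = path_to_root(a, parent), path_to_root(b, parent)
    depth_in_a = {node: i for i, node in enumerate(path_a)}
    for j, node in enumerate(path_b):
        if node in depth_in_a:
            return depth_in_a[node] + j
    raise ValueError("no common ancestor")

print(tree_distance("WR", "RB", OBJECT_PARENT))    # WR and RB meet at 'player'
print(tree_distance("WR", "team", OBJECT_PARENT))  # WR and team only meet at 'entity'
```

In this toy tree WR is closer to RB than to Team, which matches the intuition in the paragraph above.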

Another aspect of ‘distance’ that is important is the distance between each sentence in a narrative or sequence of narratives. A sentence might seem a bit duplicative following directly after a very similar sentence, but might not seem repetitive at all coming two paragraphs later. This is a big potential issue with reports that are in sequence with each other, such as a stock report that goes out every day. There are some things that make sense to mention in each report regardless of whether they appeared the day before, such as the market being up a lot. Other things, such as a given stock having really good analyst ratings, would be tedious if mentioned every single day.
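For a series of reports, one simple approach is a per-story ‘cooldown’. The story types and cooldown values below are invented for illustration, and a real system would presumably tune them (or learn them) rather than hard-code them.

```python
# Hypothetical cooldowns: how many reports must pass before a story type can reappear.
COOLDOWN_REPORTS = {
    "market_up_big": 0,           # worth saying every day it happens
    "strong_analyst_ratings": 5,  # tedious if repeated daily
}

def should_mention(story_type, reports_since_last_mention):
    """Decide whether a story may run again.

    reports_since_last_mention is 1 if the story ran in the previous report,
    2 if it ran in the one before that, and None if it has never run.
    """
    if reports_since_last_mention is None:
        return True
    return reports_since_last_mention > COOLDOWN_REPORTS.get(story_type, 0)
```

Here a big market move can run on consecutive days, while the analyst-ratings story has to sit out five reports before it is eligible again.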

Having balanced all the above complicated issues related to repetition, you run smack dab into another huge problem: what if you WANT something to be repetitious? For example:

The Golden State Warriors weren’t even playing the same game with the Timberwolves on Friday, getting trounced 132-98. Not only did they get blown out, but the loss knocked them out of the last guaranteed playoff spot.

I think this paragraph reads well. However, if you look at the last sentence, it is composed of two parts: (1) team got blown out, and (2) team out of the playoffs. The first part, ‘team got blown out’ was just mentioned in the previous sentence. Therefore, the narrative generation system has to take into account another factor, which registers how a particular piece of information is being used within an article and whether that precludes, or in fact invites, one or more mentions of that same piece of information.

So, we’ve established that good narrative generation software has to balance:

- The conceptual distance between the ‘events’ behind any two sentences

- The conceptual distance between any objects identified in those sentences

- The inherent repetitiousness of each event in the sentences

- The distance between the two sentences within a narrative (or sequence of narratives) and the effect of that distance

- Whether that repetition is even a problem at all or rather is the whole point of the structural arrangement.

Each of these factors is an independent dimension, so they must all be balanced simultaneously.
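To show what balancing the five factors at once could look like, here is a deliberately simple sketch. The field names, the 0-to-1 scales, the multiplicative combination, and the half-life decay are all assumptions chosen to keep the example short, not a claim about how a production system weighs them.

```python
from dataclasses import dataclass

@dataclass
class PairFeatures:
    event_similarity: float     # 0..1, where 1 means the same underlying concept
    object_similarity: float    # 0..1, where 1 means the same object
    inherent_repetition: float  # 0..1, higher for 'unique' events such as season highs
    sentences_apart: int        # distance between the two sentences
    intentional_callback: bool  # e.g. "Not only did they get blown out, but ..."

def repetition_score(f, half_life=3.0):
    """Return a 0..1 score; higher means the pair is more likely to read as repetitive."""
    if f.intentional_callback:
        return 0.0  # the repetition is the point of the structure
    decay = 0.5 ** (f.sentences_apart / half_life)  # penalty halves every half_life sentences
    return f.event_similarity * f.object_similarity * f.inherent_repetition * decay

adjacent = PairFeatures(0.9, 1.0, 0.8, sentences_apart=1, intentional_callback=False)
far_apart = PairFeatures(0.9, 1.0, 0.8, sentences_apart=10, intentional_callback=False)
callback = PairFeatures(0.9, 1.0, 0.8, sentences_apart=1, intentional_callback=True)
print(repetition_score(adjacent), repetition_score(far_apart), repetition_score(callback))
```

Multiplying the factors means any one of them can drive the score toward zero, which is a design choice: a pair about very different objects, or a pair separated by several paragraphs, stops counting as repetition no matter how similar the events are.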

The worst part is, when it’s done right absolutely nobody notices! When the software creates a paragraph that contains three related sentences that somehow don’t step on each other, we take it for granted, since human brains are so exceptionally tuned to understanding conceptual overlap that we don’t even consciously recognize avoiding repetition as ‘thinking’ at all.

That’s the bad news. The good news is that effectively dealing with repetition has given infoSentience’s technology a big leg up against the competition. It’s not something concrete we can point to, but rather it allows for higher quality, more insightful content to be built in the first place. And while difficult, embedding this intelligence into software allows us to do things that can’t be done by humans. For example, we can personalize repetition for each individual reader in a sequence of reports. Instead of automatically ‘repping out’ a story that appeared in the previous report, we can check to see if an individual read the previous report, and if not, simply skip any repetition issues presented by the previous report. That’s just one of the many ways that automated content can go beyond human capability once you’ve been able to mimic human conceptual thinking.
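The per-reader idea can be sketched in a few lines. The data shapes here (a list of previous reports and a set of "opened" records) are hypothetical stand-ins for whatever read-tracking is actually available.

```python
def stories_to_suppress(reader_id, previous_reports, opened):
    """Collect the stories this reader has actually seen.

    previous_reports: list of (report_id, set of story keys in that report)
    opened: set of (reader_id, report_id) pairs for reports that were read
    """
    seen = set()
    for report_id, story_keys in previous_reports:
        if (reader_id, report_id) in opened:
            seen |= story_keys
    return seen

previous_reports = [("mon", {"analyst_ratings", "earnings_beat"}), ("tue", {"new_ceo"})]
opened = {("alice", "mon"), ("alice", "tue"), ("bob", "tue")}
print(stories_to_suppress("alice", previous_reports, opened))
print(stories_to_suppress("bob", previous_reports, opened))
```

In this example Bob never opened Monday's report, so Monday's stories are not treated as repetition for him and remain eligible for his next report.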

Machine learning has applications within just about every vertical, from demand forecasting in retail to diagnosing cancer for medical patients. Typically, these machine learning models output their results as a probability of a certain outcome. It might say, for instance, that a particular patient has a 72% chance of lung cancer from looking at their CT scan.

Imagine yourself as a patient in this situation, and you can probably see where the problem is. If you found out you had a 72% chance of having cancer, you would undoubtedly want to know why the machine learning system thought that.

In theory, that’s where Explainable AI (XAI) comes in. XAI would allow someone like a patient or doctor to read a report that explained exactly how the machine learning model came to its conclusion. In practice, however, most machine learning algorithms operate as a ‘black box’, meaning that there is no way for human beings to understand how they came to their conclusions.

There are two key reasons humans have a hard time understanding machine learning models. First, the ‘factors’ that are used by these models usually have no analogue in human thinking. (WARNING: massive simplification ahead) These models typically use a very large number of ‘neurons’ that each separately try to learn an element of the prediction problem. These neurons are randomly assigned to do some calculation, and over time the model iterates until these neurons start to do better at creating an output. Unfortunately, because these neurons are often just a simple calculation along with some numerical weights, they can’t really be described in human terms.

When predicting retail sales, for instance, the machine learning model might end up creating a neuron that tends to heavily weight recent sales values when predicting upcoming values. It wouldn’t actually have a name in the model corresponding to its role, like “recent sales factor neuron.” Rather, it would be some random calculation that just happens, in general, to end up weighing recent sales heavily. Now, a data scientist might be able to look at an individual neuron and roughly figure out what it is doing, but remember there are often 1,000 or more neurons, and models often include several ‘layers’ of these neurons on top of each other, which start to make the role of any individual neuron hopelessly opaque.
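In the same spirit of massive simplification, here is what a single ‘neuron’ of that kind might look like. The weights, bias, and ReLU activation are made-up illustrative values, not taken from any real model.

```python
# Hypothetical learned weights for one neuron.
# Inputs are sales from 4, 3, 2, and 1 weeks ago, in that order.
weights = [0.05, 0.10, 0.30, 0.90]
bias = -0.2

def neuron(inputs):
    """Weighted sum plus bias, passed through a ReLU activation."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return max(0.0, total)

# Nothing in the model labels this a "recent sales" neuron; you only find that
# out by inspecting which input carries the largest weight.
most_influential = max(range(len(weights)), key=lambda i: abs(weights[i]))
print(most_influential)  # index of the most heavily weighted input
```

Inspecting one neuron this way is manageable. Doing it for a thousand of them, stacked in layers, is where the approach breaks down.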

One way data scientists have tried to get around this is by using heat maps. When looking at an automated cancer diagnosis, for example, an XAI system can highlight on the CT image where the model is placing the most weight. This is somewhat helpful, but ultimately insufficient because of how all the factors in the model come together. For instance, the model may be concerned with a particular group of pixels in the CT scan, but only because of the relationship between those pixels and another group in a totally separate part of the scan. In that case, it is really the combination of pixels that is important, but the heat map has no way of showing that.
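A toy example helps show the limitation. The two ‘models’ below take just two pixel values, and the heat map is computed by occlusion (zeroing one pixel at a time and measuring the drop in output), which is one common way to build such maps. Both models and the `pairwise_interaction` check are illustrative assumptions, not a description of any specific XAI tool.

```python
def interaction_model(a, b):
    """Output depends only on the combination of the two pixels."""
    return a * b

def additive_model(a, b):
    """Each pixel contributes independently."""
    return a + b - 1

def occlusion_heat_map(model, a, b):
    """Per-pixel importance: how much the output drops when that pixel is zeroed."""
    base = model(a, b)
    return [base - model(0, b), base - model(a, 0)]

def pairwise_interaction(model):
    """Non-zero only when the pixels matter jointly rather than separately."""
    return model(1, 1) - model(1, 0) - model(0, 1) + model(0, 0)

print(occlusion_heat_map(interaction_model, 1, 1), occlusion_heat_map(additive_model, 1, 1))
print(pairwise_interaction(interaction_model), pairwise_interaction(additive_model))
```

Both models produce the same heat map for this input, even though only one of them relies on the relationship between the pixels. The per-pixel view simply has nowhere to record that difference.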

This brings us to the second big problem, which is that even if you could correctly describe the actions of each individual neuron, how could you hope to actually synthesize that information into something that would be easy for a human being to understand? A model with 100+ factors is complicated enough without taking into account that each of those factors is interacting with each other.

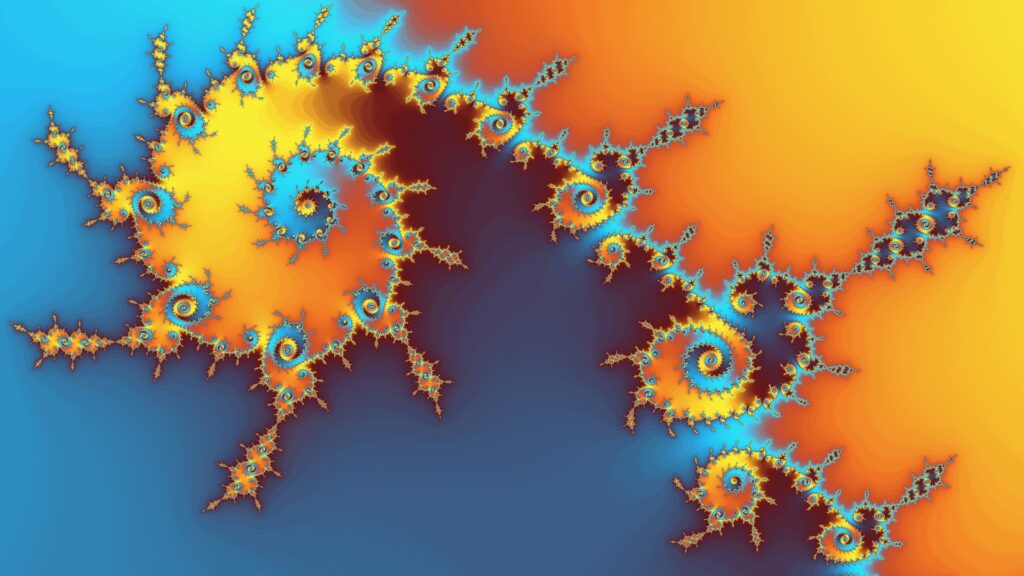

This is where infoSentience’s Fractal Synthesis technology can make a huge difference. In order to understand how Fractal Synthesis works, we first need to take a step back and look at how infoSentience’s technology works in general (how meta, right?). infoSentience has created technology that can analyze any data set, figure out what is most important, and explain what it found using natural language. Critically, this system is flexible across four key dimensions:

- Time – you can ask the system to center its analysis on any particular period in time and any time interval. For example, you could ask it to give a retail report for the week starting on December 8th, or a quarterly report for the 2nd quarter 2022.

- Subject(s) – you can ask the system to report on a single subject or a group of subjects, and it will not only deliver that report, but include relevant context such as what sub-components within the group were most important, and also how the selected subject fits into other groups within the dataset.

- Length – the system can write more or less information depending on what you want to see. If given less room to write, the system will focus more on the main points. If given more room, it will look to add additional context.

- Interest – the system will be set up with a ‘best guess’ of what a user is most interested in, whether that be particular metrics or types of stories (trends, outlier events, etc.). However, the system can also quickly change how it weights different types of content to tailor the output to a particular use case.

Having this level of flexibility gives the system the ability to report not just on a given data set overall, but on any individual component or sub-set within the data. That’s why we call it Fractal Synthesis: it’s able to apply its algorithms and generate in-depth reports at any level of specificity.

For example, for a given retail data set it could create a three-paragraph report on the top-level results, which might include mentioning that a particular department had done well. If the user was interested in learning more about that department they could create a brand new three-paragraph report just on that department. If an interesting metric was mentioned in that department report, let’s say sales returns for example, the user could create a brand-new report just on sales returns within that department, or zoom out and look at sales returns for the entire company.
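The drill-down idea can be illustrated with a small sketch in which the same function reports on any node of a hierarchy, for any metric, at any length. The data, the `report` function, and its sentence wording are all hypothetical; this is not the Fractal Synthesis implementation, just the ‘same report at every level’ shape.

```python
# Hypothetical retail data: the company is made up of two departments.
DATA = {
    "company": {"children": ["apparel", "electronics"]},
    "apparel": {"sales": 120, "returns": 10},
    "electronics": {"sales": 300, "returns": 45},
}

def total(node, metric):
    """Sum a metric over a node and everything beneath it."""
    entry = DATA[node]
    if "children" in entry:
        return sum(total(child, metric) for child in entry["children"])
    return entry[metric]

def report(node, metric, max_sentences=3):
    """One top-line sentence, then the largest sub-components if there is room."""
    lines = [f"{node} {metric}: {total(node, metric)}"]
    children = DATA[node].get("children", [])
    ranked = sorted(children, key=lambda c: total(c, metric), reverse=True)
    for child in ranked[: max_sentences - 1]:
        lines.append(f"{child} contributed {total(child, metric)}")
    return lines

print(report("company", "sales"))                   # top-level view
print(report("electronics", "returns"))             # zoom in on one department and metric
print(report("company", "returns", max_sentences=2))  # zoom back out, shorter report
```

The user never switches tools: changing the subject, the metric, or the length just produces a new report from the same machinery.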

You can probably see how this could help solve the critical problems within XAI. Machine learning models are based on hundreds of factors AND their interactions with each other. At the end of the day, these interactions sum up to a number, which might correspond to the topic sentence in a report. In order to contextualize this topic sentence, the Fractal Synthesis technology could dive into all of the factors and use its subject and length flexibility to summarize the most important factors. Since each of the factors summarized is itself made up of multiple components, a user could simply ask the system to ‘dive in’ to that factor to get a new report on its most important subcomponents.

In order to make any of this high-level synthesis possible, the Fractal Synthesis system does need to be able to categorize the ‘work’ that each neuron is doing on its own and in combination. This is a tricky process (currently the biggest limitation of the system), and tends to vary quite a bit depending on the model being used and its targeted output. Fundamentally, however, the system plays the role of the data scientist who is able to examine the output of a single neuron and determine what, approximately, that neuron is doing. The key difference is that once it has that ‘map’ of what every neuron is doing, it is capable of quickly synthesizing what the model is collectively doing and explaining that using natural language.

Solving XAI is critical to allow the power of machine learning models to be applied in real-world situations. It is needed because it allows us to: (1) trust AI systems by enabling us to understand and validate the decisions that they make, (2) debug AI systems more effectively by identifying the sources of errors or biases in the system, and (3) identify opportunities for improvement in AI systems by highlighting areas where the system is underperforming or inefficient. Fractal Synthesis technology could be the key to unlocking XAI in complex machine learning models, and I’m looking forward to keeping you informed on our progress in this space.

In the olden days, if you wanted to send out a report to your employees, or an update to your clients, or a letter intended for prospective customers, you only had two options:

Option #1: Mass Communication

You create one piece of content and send this off to everybody. If it was a report, then everybody got the same report. If it was a letter to your clients, then every client got the same letter. This has the benefit of being cheap and fast, allowing you to reach as many people as possible. The drawback is that everybody gets the same content regardless of their particular circumstances.

Option #2: Custom Communications

Every client, potential customer, or employee gets content that is specifically written for them. This has the advantage of making the communication maximally effective for each end user. The cost, of course, is that it takes time and money to create each communication. In many cases, it’s simply not feasible to produce the number of narratives required, even with an outsized budget.

But what if you didn’t have to choose the least bad option? That is what’s possible with Mass Custom Communication (MCC), which takes the best parts of each option, allowing you to deliver insightful, impactful reports on a near infinite scale. But before we talk more about what MCC is, let’s talk about what it isn’t. MCC is not a form letter and it is not a ‘Mad Libs’ style narrative. We’ve all been the recipient of communications like that, and they barely register as being customized at all, let alone making us feel like they have been written just for us. This goes double if the narrative or report is part of an ongoing series of communications which all use the same template.

In order to create true MCC, you need two things: (1) a rich data set, and (2) Natural Language Generation (NLG) software that can truly synthesize that data set and turn it into a high-quality narrative. Let’s take these in order. First, you need a rich data set because you must have enough unique pieces of data, or combinations within that data, to write up something different for each end user. In essence, you need ‘too much’ information to fit into a template.

Once you have ‘too much’ information, you need high-quality synthesis from an AI NLG system. This system can go through thousands of data points to find the most relevant information for each end user. It then can automatically organize this information into a narrative with clear main points and interesting context, so that the end user is able to read something that feels unique and compelling to them. For example, a salesperson can get a weekly report that not only tells them about the top-line numbers for their sales this week, but also contextualizes those numbers with trends from their sales history and larger trends within the company.

Individual Outreach

A great example of the power of MCC comes from the world of fantasy sports. For those of you unfamiliar with how fantasy sports work, you and your friends each draft players within a given sport, and then your ‘team’ competes with other teams in the league. It’s sort of like picking a portfolio of stocks and seeing who can do the best.

People love playing fantasy sports, but because each team is unique, and because there are millions of fantasy players, people were never able to get stories about their fantasy league the same way that they get stories about the professional leagues. With the advent of MCC, suddenly they could, and CBS Sports decided to take advantage of it.

For the last 10 years, we’ve written up stories about what happened for every CBS fantasy team every week, creating game recaps, game previews, draft reports, power rankings, and many other content pieces, each with headlines, pictures, and other visual elements. This was actually infoSentience’s first product, so it is near and dear to my heart. We’ve now created over 200 million unique articles which give CBS players what we call a ‘front page’ experience, which covers their league using the same types of content (narratives, headlines, pictures) as the front page of a newspaper. Previously, they could only get a ‘back page’ experience that showed them columns of stats (yes, I realize I’m dating myself with this reference).

Critically, quality is key when it comes to making these stories work. Cookie-cutter templates are going to get real stale, real fast when readers see the same things over and over again each week. Personalization involves more than just filling in names and saying who won. It’s about finding the unique combinations of data and events that speak to what made the game interesting. It’s writing about how you made a great move coaching AND how that made the difference in your game AND how that means you are now the top-rated coach in your league.

This type of insight is what makes for compelling reading. Open rates for the CBS weekly recap emails we send out are the highest of any emails CBS sends to their users. If you are sending out weekly reports to each of your department managers, or monthly updates for each of your clients, they have to be interesting or they’re not going to be read. If each report is surfacing the most critical information in a fresh, non-repetitive manner, then end users will feel compelled to read them.

Long Tail Reporting

Reports don’t necessarily have to be targeted at individuals in order to achieve scale. You might also just need to write a lot of reports using many subsets of your data. These reports might be targeted towards groups, or just posted onto a website for anybody to read. This might be better labeled Mass ‘Niche’ Communications, but it definitely falls under the MCC umbrella.

CBS has not only taken advantage of MCC for their fantasy product, but has also applied it to live sports. They leverage our automated reporting technology to supplement their newsroom by writing stories they otherwise wouldn’t have time for. Being one of the major sports sites in the US, they obviously have plenty of quality journalists. That still doesn’t mean that they are capable of covering every single NFL, NBA, college basketball, college football, and European soccer match, let alone writing up multiple articles for each game, covering different angles such as recapping the action, previewing the game, and covering the gambling lines.

That’s where our technology comes in. We provide a near limitless amount of sports coverage for CBS Sports at high quality. That last part is once again key. CBS Sports is not some fly-by-night website looking to capitalize on SEO terms by throwing up ‘Mad-Libs’ style, cookie-cutter articles. These write-ups need to have all the variety, insight, and depth that a human-written article would have, and that’s what we’ve delivered. For example, take a look at this college football game preview. If you just stumbled across that article you would have no idea it was written by a computer, and that’s the point.

Our work for IU Health is another example of this type of reporting. We automatically write and update bios for every doctor in their network, using information such as their education history, specializations, locations, languages, and many other attributes. We even synthesize and highlight positive patient reviews. Like with CBS, these bios would be too difficult to write up manually. There are thousands of doctors within IU’s network, and dozens come and go every month. By automating the bios, IU not only saved themselves a great deal of writing, but also made sure that all their bios are up to date with the latest information, such as accurate locations and patient ratings.

Your Turn?

If you are in a situation where you are either (A) not creating all the content you need because you don’t have the workforce, or (B) using generic reports/communications when you would really benefit from having a custom message, then hopefully this article opened your eyes to a new possibility. Mass Custom Communication allows you to have your cake and eat it too. Maybe your use case can be the one I talk about in the next version of this article.

[Note: All of the following concerns AI’s ability to write about specific data sets, something very different from ChatGPT-style natural language generators]

We all know the basics for why good writing is important in business. Decision makers want to read reports that are accurate, impactful, and easy to understand. With those qualities in mind, let’s take a look at this short paragraph:

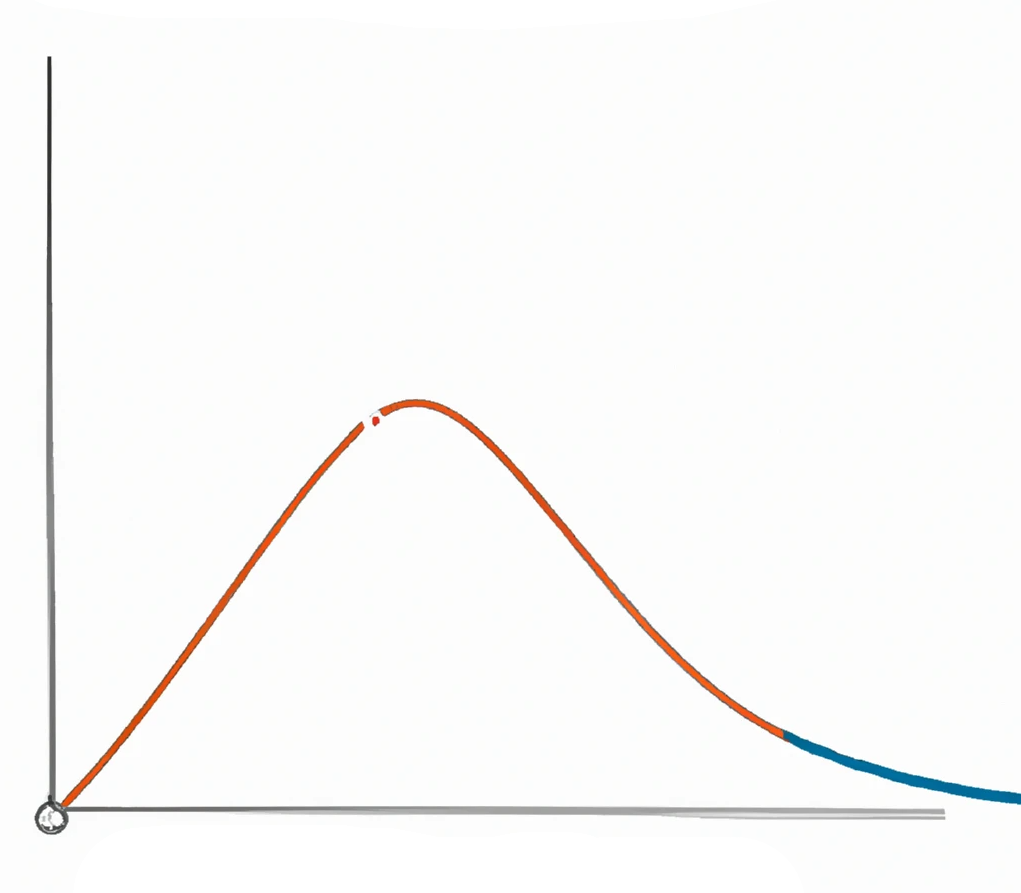

Widgets were down this month, falling 2.5%. They were up the last week of the month, rising 4.5%.

Assuming the numbers are correct, the sentences would certainly qualify as being ‘accurate’. The metrics mentioned also seem like they would be ‘impactful’ to a widget maker. Is it ‘easy to understand’? Here’s where it gets a bit trickier. Both sentences by themselves read fine, but when you put them together, there’s something missing. The writing comes across as robotic. Ideally, we’d want the sentences to read more like this:

Widgets were down this month, falling 2.5%. The last week of the month was a bright spot though, as sales rose 4.5%.

This is conveying the exact same basic information as before: (1) monthly sales down, and (2) last week up. But the sentence contains a critical new word: though. Transition words like ‘though’, ‘however’, ‘but’, and so on play a critical role in helping our brains not just download data, but rather tell the story of what is happening in the data. In this case, the story is about how there’s a positive sign in the time series data, which is also emphasized by using the ‘bright spot’ language.

If a manager was just reading the first example paragraph, it’s likely that they would be able to fill in the missing info. After reading the second sentence their brain would take a second and say “oh, that’s a good sign going forward in the midst of overall negative news.” But having to make the reader write the story in their head is not cost free.

After all, it's not enough to just understand a report. Whether it’s an employee, C-suite executive, client, or anybody else, the point of a report is to give someone the information they need to make decisions. Those decisions are going to require plenty of thinking on their own, and ideally that is what you are spending your brainpower on when reading a report.

This brings me to my alternate definition of what ‘good writing’ really means: it’s when you are able to devote your brainpower to the implications of the report rather than spending it on understanding the report.

So what are the things that we need to allow our brain to relax when it comes to understanding data analysis? There are three main factors, which I call “The Three T’s”, and they are:

- Trust – you have to trust that the numbers are accurate of course, but real trust goes beyond that. It’s not just feeling confident about the stories that are on the page, but also feeling confident that there isn’t anything critical that is being left out.

- Themes – the report can’t just be a series of paragraphs; there has to be a ‘through-line’ that connects everything, allowing you to quickly grasp which stories are central and which ones are secondary.

- Transitions – this can be seen as a mini version of the ‘Themes’ factor. Transitions and other emotional language, as shown in our example above, can help keep your thoughts on track as you make your way through each sentence.

All of these things are hard enough for a human data analyst or writer to pull off (am I extra nervous about my writing quality for this post? Yes, yes I am). For most Natural Language Generation (NLG) AI systems, nailing all Three T’s is downright impossible. Each factor presents its own unique challenges, so let’s see why they are so tough.

Trusting AI

The bare minimum for establishing trust is to be accurate, and that’s one thing that computers can do very easily. Better, in fact, than human analysts. The other key to establishing trust is making sure every bit of critical information will make it into the report, and this is where AIs have traditionally struggled. This is because most NLGs in the past used some sort of template to create their narratives. This template might have a bit of flexibility to it, so a paragraph might look like [1 – sales up/down for month] [2 – compare to last year (better or worse?)] [3 – estimated sales for next month]. Still, templates like that aren’t nearly flexible enough to tell the full story. Sometimes the key piece of information is going to be that there was a certain sub-component (like a department or region) that was driving the decrease. Other times the key context is going to be about how the movement in the subject of the report was mirrored by larger outside groups (like the market or economic factors). Or, the key context could be about the significance of the movement of a key metric, such as whether it has now trended down for several months, or that it moved up more this month than it has in three years.
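Here is what the three-slot template above might look like in code. The field names and numbers are invented for the example. The point to notice is that the data includes a region that is clearly driving the decline, and the template has no slot in which to say so.

```python
def template_report(data):
    """Fill the three fixed slots: direction, comparison to last year, estimate."""
    direction = "up" if data["month_change_pct"] > 0 else "down"
    better = data["month_change_pct"] > data["last_year_change_pct"]
    return (
        f"Sales were {direction} {abs(data['month_change_pct'])}% this month. "
        f"That is {'better' if better else 'worse'} than the same month last year. "
        f"Estimated sales for next month are {data['next_month_estimate']}."
    )

# Hypothetical data: the West region is responsible for the drop.
data = {
    "month_change_pct": -2.5,
    "last_year_change_pct": 1.0,
    "next_month_estimate": 10400,
    "region_changes_pct": {"West": -9.0, "East": 0.5},
}
text = template_report(data)
print(text)
```

The output is accurate as far as it goes, but the most important story in the data never makes it onto the page.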

There’s no way to create a magic template that will somehow always include the most pertinent information. The way to overcome this problem is to allow the AI system to work the same way a good human analyst does: by first analyzing every possible event within the data and then writing up a report that includes all of those events. But having this big list of disjointed events makes it that much more difficult to execute on the second ‘T’: Themes.

Organizing the Story

How does the AI go from a list of interesting events to a report that has a true narrative through line, with main points followed by interesting context? The only way to do this is by embedding conceptual understanding into the AI NLG system. The system has to be able to understand how multiple stories can come together to create a theme. It has to be able to understand which stories make sense as a main point and which stories are only interesting as context to those main points. It also needs to be able to fit those stories into a narrative of a given length, thereby requiring some stories to be told at a high level rather than going into all of the details.
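As a rough sketch of fitting stories to a length, the function below puts main points first and then spends any remaining room on context for those main points. The story format, the scores, and the greedy strategy are all assumptions made for illustration.

```python
def build_outline(stories, max_sentences):
    """Pick main points first, then add their context if space allows.

    Each story is a dict with 'id', 'score', and 'parent' (None for a main
    point, otherwise the id of the main point it gives context to).
    """
    mains = sorted((s for s in stories if s["parent"] is None), key=lambda s: -s["score"])
    contexts = sorted((s for s in stories if s["parent"] is not None), key=lambda s: -s["score"])
    chosen_mains = mains[:max_sentences]
    room = max_sentences - len(chosen_mains)
    chosen_ids = {m["id"] for m in chosen_mains}
    chosen_context = [c for c in contexts if c["parent"] in chosen_ids][:room]
    outline = []
    for main in chosen_mains:
        outline.append(main["id"])  # the main point, followed by its own context
        outline.extend(c["id"] for c in chosen_context if c["parent"] == main["id"])
    return outline

stories = [
    {"id": "sales_down", "score": 9, "parent": None},
    {"id": "returns_up", "score": 5, "parent": None},
    {"id": "west_region_drove_drop", "score": 7, "parent": "sales_down"},
    {"id": "returns_highest_in_3_years", "score": 3, "parent": "returns_up"},
]
print(build_outline(stories, 2))
print(build_outline(stories, 3))
```

With room for only two sentences the outline is just the two main points; with room for three, the best piece of context is slotted in directly after the main point it supports.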

Writing the Story

Just as the importance of transitions is a small-scale version of the importance of themes, the challenges of dealing with transitions are a small-scale version of the challenges of dealing with themes. That doesn’t make them any easier, unfortunately. The issue with themes is structural: how do I organize all the key pieces of information? The problem with transitions comes about after everything has been organized and the AI has to figure out how to write everything up.

Again, the solution is to have an AI system that understands conceptually what it is writing about. If it understands that one sentence is ‘good’ and the next sentence is ‘bad’, then it is on the path to being able to include the transitions needed to make for good writing. Of course, it’s not quite that simple, as there are many conceptual interactions taking place within every sentence. For example, starting out a sentence with “However, …” would read as robotic if happening twice within the span of a few sentences. Therefore, the AI system needs to find a way to take into account all of the conceptual interactions affecting a given sentence and still write it up properly.
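A minimal sketch of the idea: tag each sentence as ‘good’ or ‘bad’, add a contrast transition whenever the mood flips, and avoid reusing a transition that appeared within the last couple of sentences. The transition list, the `window` parameter, and the good/bad tagging are hypothetical simplifications, and lowercasing the first letter would mishandle sentences that start with a proper noun.

```python
CONTRAST = ["However,", "That said,", "On the other hand,"]

def add_transitions(sentences, window=2):
    """sentences: list of (text, mood) pairs, where mood is 'good' or 'bad'."""
    out, recent = [], []
    for i, (text, mood) in enumerate(sentences):
        transition = None
        if i > 0 and mood != sentences[i - 1][1]:
            # Pick the first transition not used recently; fall back to the first one.
            transition = next((t for t in CONTRAST if t not in recent), CONTRAST[0])
            # Naive lowercasing: would be wrong for a sentence starting with a name.
            text = f"{transition} {text[0].lower()}{text[1:]}"
        recent = (recent + [transition])[-window:]  # remember only the last few
        out.append(text)
    return out

result = add_transitions([
    ("Widgets fell 2.5% this month.", "bad"),
    ("The last week of the month rose 4.5%.", "good"),
    ("Returns also climbed.", "bad"),
])
print(result)
```

Even this toy version has to track two things at once (the mood change and the recent wording), which hints at how quickly the interactions pile up in a real narrative.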

Have no Fear!

These are all complex challenges, but thankfully infoSentience has built techniques that can handle The Three T’s with no problems. Whether it’s a sales report, stock report, sports report, or more, infoSentience can write it up at the same quality level as the best human writers. Of course, we can also do it within seconds and at near infinite scale (Bill Murray voice- “so we got that going for us”).